By David Attwater, Senior Scientist, Talkmap

Over five billion people have access to the Internet today, and more and more of them are talking about Generative AI, a technology whose use is growing by the second. Since the launch of ChatGPT in 2022, artificial intelligence has become central to conversations about the future of technology. Accessible to novices and experts alike, and adopted in the home and the workplace, Generative AI is rapidly becoming part of everyday life.

Gen AI is revolutionising the way we work and do business. One third of the organisations surveyed by McKinsey this year are regularly using Generative AI in “at least one business function”, while 40% said that they’re likely to increase investment in artificial intelligence overall, thanks to advances in Generative AI.

So, how do we take this information and apply it to the way we work?

This series aims to demystify Generative AI and Large Language Models, establishing what they are, how they work, and why they’ve become one of the most important innovations for businesses in recent years. This foundation will give us a better footing to explain when and how to use them in business.

What is Generative AI?

Generative AI is a type of artificial intelligence capable of producing different kinds of content, including text, imagery, audio, and synthetic data. Its models are used for tasks of widely varying complexity, from image generation and writing assistance to helping with drug discovery!

Take DALL-E, OpenAI’s well-known image-generation model. If a user were to ask for “an armchair in the shape of an avocado,” for example, they might get back something like this:

Source: https://openai.com/research/dall-e December 2023

In this case, the AI has taken a prompt and passed it into a model trained to map text descriptions to images, giving us these brunch-appropriate seating options.

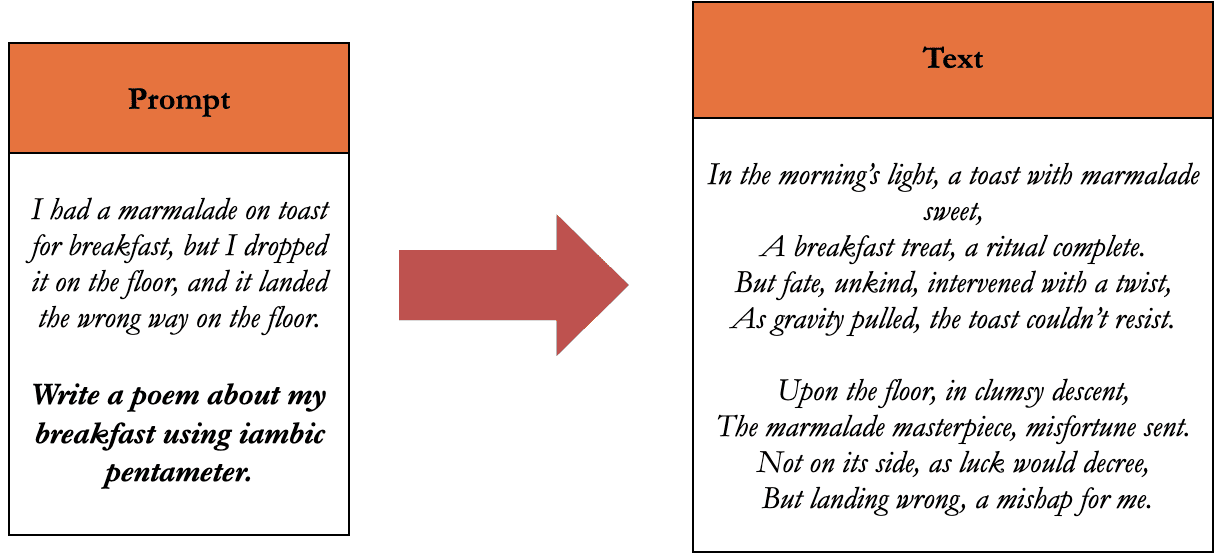

Let’s look at another example:

Here, the Generative AI uses a text-to-text model: a Large Language Model. As in the previous example, the AI takes a text prompt and turns it into a response – in this case, a poem.

What are Large Language Models?

A Large Language Model, or LLM, is an algorithm trained to perform a variety of natural language processing tasks. When given some input, it predicts the most probable next word, based on how it has been trained.
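As a minimal sketch of that last step, assume a model has already assigned a probability to each candidate next word. The probabilities below are invented for illustration, and real LLMs score sub-word tokens rather than whole words. Always picking the most probable word is known as greedy decoding; many systems instead sample from the distribution, which the second function shows.

```python
import random

# Invented probabilities for the word after "The cat sat on the".
# A real model would compute these; here they are hard-coded for illustration.
next_word_probs = {"mat": 0.62, "sofa": 0.21, "floor": 0.12, "moon": 0.05}


def most_probable_next_word(probs: dict[str, float]) -> str:
    """Greedy decoding: return the word with the highest probability."""
    return max(probs, key=probs.get)


def sample_next_word(probs: dict[str, float], rng: random.Random) -> str:
    """Sampling: pick a word at random, weighted by its probability."""
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


print(most_probable_next_word(next_word_probs))              # mat
print(sample_next_word(next_word_probs, random.Random(42)))  # one of the four words, weighted by probability
```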

Modern models are trained on massive data sets using advanced machine learning algorithms. The technology, however, has been around for decades. In the nineties, language models were relatively simple statistical models, aided by some degree of language pattern analysis. In the early 2000s, these models became more complex as widespread adoption of the internet expanded the available training data. More recently, advances in deep learning architectures have led to the development of generative pre-trained transformers – or GPT for short.

Simple Language Models

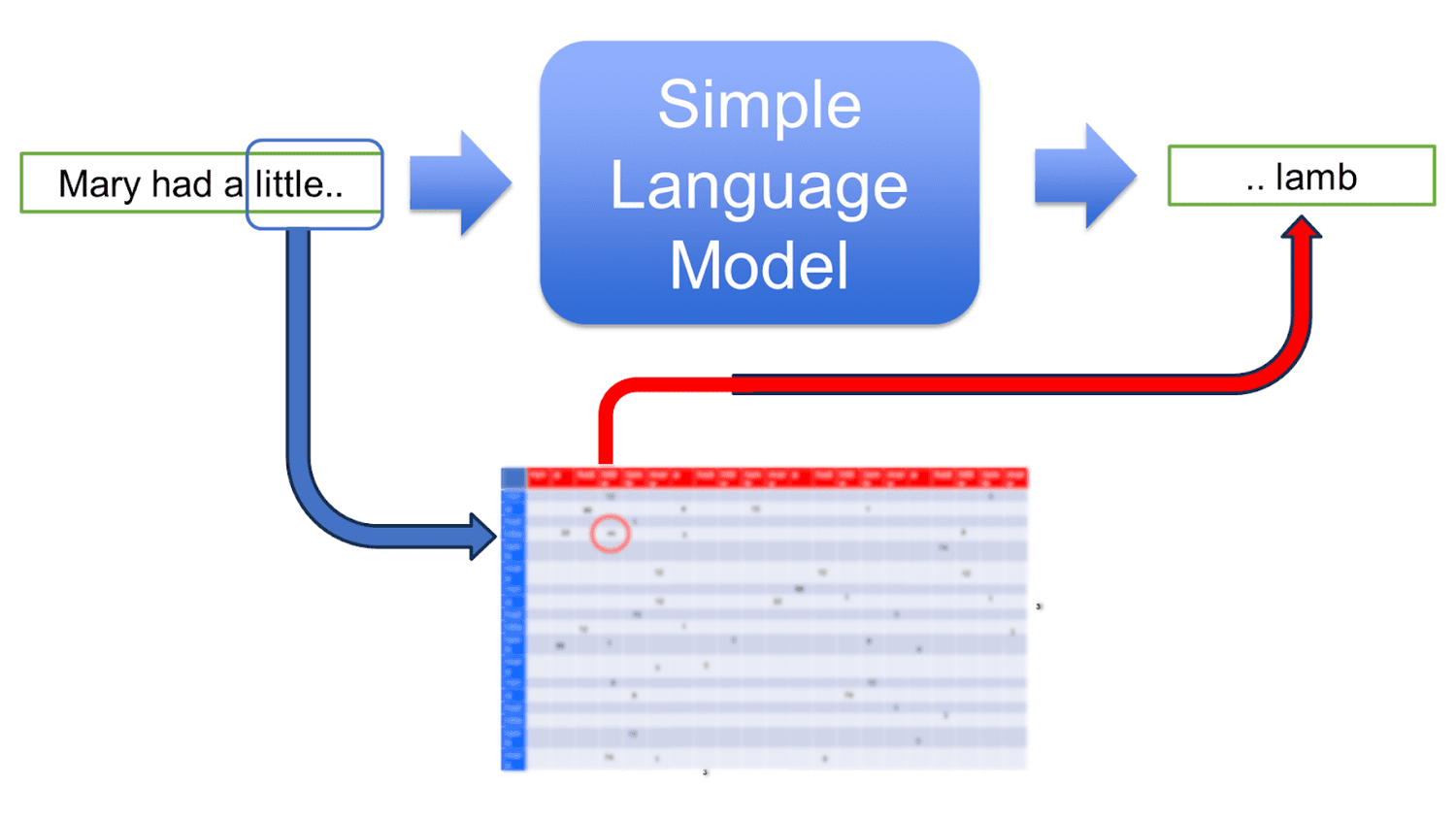

To understand how LLMs came to be, let’s start by looking at how one would train a basic language model, using a book of nursery rhymes as an example.

First, we look at word sequences: which words follow which other words in the book. Then we count these pairs and build a big table of them.

The left column shows the context, which is the word that has just appeared. Across the top is a list of all the words that can come next. To build the table, we simply go through the whole book and count which words follow which other words. Now imagine we want to find the word most likely to follow ‘Mary had a little…’. Our table uses only a single word of context, so we look up the word that most often follows ‘little’. Because we built this table from a book of nursery rhymes, that word is ‘lamb’.
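The counting procedure described above can be sketched in a few lines of Python. The three-line corpus stands in for the book of nursery rhymes, and the tokeniser (lower-case everything, drop punctuation) is a simplifying assumption. Instead of a grid, the table is stored as a dictionary that maps each context word to a count of the words that followed it.

```python
import re
from collections import Counter, defaultdict
from typing import Optional

# A tiny stand-in for "a book of nursery rhymes".
corpus = """
Mary had a little lamb, little lamb, little lamb.
Mary had a little lamb, its fleece was white as snow.
Twinkle, twinkle, little star, how I wonder what you are.
"""


def tokenize(text: str) -> list[str]:
    """Lower-case the text and keep runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def build_bigram_table(words: list[str]) -> dict[str, Counter]:
    """table[prev][nxt] = number of times `nxt` directly followed `prev`.

    Line breaks are ignored, so pairs that span two lines are counted too.
    """
    table: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table


def predict_next(table: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if it never appeared as context."""
    counts = table.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


table = build_bigram_table(tokenize(corpus))
print(table["little"])                # Counter({'lamb': 4, 'star': 1})
print(predict_next(table, "little"))  # lamb
```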

A model like this – otherwise known as a bigram model – is incredibly simple: it predicts the next word based only on the previous word. This is of course too simple for practical use, but it can easily be scaled up by using the context of the last two, three, or four words and building the table from a very large number of texts.
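Scaling up the context is a small change to the same idea: instead of keying the table on one previous word, key it on the last n words. The corpus and tokeniser below are the same simplifying assumptions as in a bigram sketch. With the phrase ‘Twinkle, twinkle, little’, a one-word context predicts ‘lamb’, because ‘little’ is followed by ‘lamb’ more often overall, whereas a two-word context correctly predicts ‘star’.

```python
import re
from collections import Counter, defaultdict
from typing import Optional

corpus = """
Mary had a little lamb, little lamb, little lamb.
Mary had a little lamb, its fleece was white as snow.
Twinkle, twinkle, little star, how I wonder what you are.
"""


def tokenize(text: str) -> list[str]:
    """Lower-case the text and keep runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def build_ngram_table(words: list[str], context_size: int) -> dict[tuple[str, ...], Counter]:
    """Map each run of `context_size` consecutive words to counts of the word that followed it."""
    if context_size < 1:
        raise ValueError("context_size must be at least 1")
    table: dict[tuple[str, ...], Counter] = defaultdict(Counter)
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        table[context][words[i]] += 1
    return table


def predict_next(table: dict[tuple[str, ...], Counter], text: str, context_size: int) -> Optional[str]:
    """Predict the next word from the last `context_size` words of `text`."""
    context = tuple(tokenize(text)[-context_size:])
    counts = table.get(context)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


words = tokenize(corpus)
one_word = build_ngram_table(words, context_size=1)   # equivalent to the bigram model
two_words = build_ngram_table(words, context_size=2)

print(predict_next(one_word, "Twinkle, twinkle, little", 1))   # lamb
print(predict_next(two_words, "Twinkle, twinkle, little", 2))  # star
```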

Modern Language Models

How do we get from simple language models, which have been around for decades, to modern language models? To understand this, we need to incorporate deep learning.

The idea behind deep learning is to loosely emulate the human brain. If each word is like a neuron, we want to build connections between words, similar to the neural networks in our minds. In this fashion, we can train language models on a wide variety of text, broken into small units called tokens (whole words or pieces of words), so that they can create a new output from new input. This process forms the basis of Generative AI.

Well-known foundational language models from Microsoft, OpenAI, and Facebook are all trained on vast amounts of text, running to billions of words. While a simple language model might look at just a few words, current state-of-the-art models can take thousands of words as their input context.
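The difference in context size can be pictured as a window over the most recent words. The sketch below assumes, for simplicity, that one word is one token (real models count sub-word tokens), and the window sizes are illustrative rather than those of any particular model. Dropping the oldest words is just one simple way to fit input into a fixed window.

```python
def fit_to_context(words: list[str], window: int) -> list[str]:
    """Keep only the most recent `window` words; older words are dropped."""
    if window <= 0:
        return []
    return words[-window:]


text_words = "Mary had a little lamb its fleece was white as snow".split()

print(fit_to_context(text_words, 1))          # ['snow'], all a bigram model sees
print(fit_to_context(text_words, 4))          # ['was', 'white', 'as', 'snow']
print(len(fit_to_context(text_words, 4000)))  # 11, the whole text fits
```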

However, it’s only recently that large language models have made the leap to creative generative tasks. So, how did we get here? The short answer is by showing models not only a huge number of instructions, but also what answers to those instructions might look like.
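To make the idea of showing a model instructions together with example answers more concrete, here is a hypothetical sketch of how such pairs might be flattened into training text. The `### Instruction:` / `### Response:` template is an assumption for illustration only; real datasets and models each use their own formats, and the example pair is made up.

```python
from dataclasses import dataclass


@dataclass
class InstructionExample:
    instruction: str  # what the user asks for
    response: str     # an example of a good answer


def to_training_text(example: InstructionExample) -> str:
    """Flatten one pair into a single string using a hypothetical template.

    During training, the model learns to continue the text after
    '### Response:' with the example answer.
    """
    return (
        f"### Instruction:\n{example.instruction}\n\n"
        f"### Response:\n{example.response}"
    )


pairs = [
    InstructionExample(
        instruction="Summarise in one sentence: 'My new phone line still isn't working.'",
        response="The customer's new phone line is not working.",
    ),
]

for pair in pairs:
    print(to_training_text(pair))
```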

Why do Large Language Models matter to businesses?

As Bill Gates, co-founder of Microsoft, puts it:

“Generative AI has the potential to change the world in ways that we can’t even imagine. It has the power to create new ideas, products, and services that will make our lives easier, more productive, and more creative.”

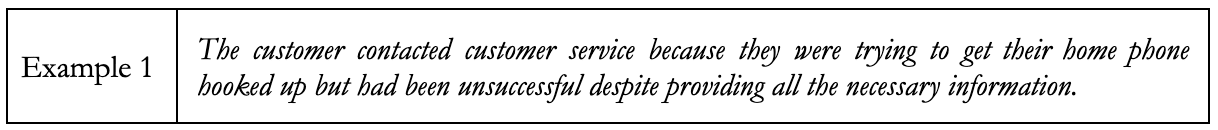

Take contact centres as an example. Customer conversations within contact centres contain a huge amount of locked-up information that has traditionally been hard to get at. Large language models can now help with conversation analytics, bringing that information to light. Here, we have a case of a customer who has been unable to install their home phone. However, they don’t lead with this problem – they lead with the fact that they’re unhappy.

So, how do we input this into a Large Language Model?

In Example 1, the model has been asked: “Why did the customer contact customer service?”

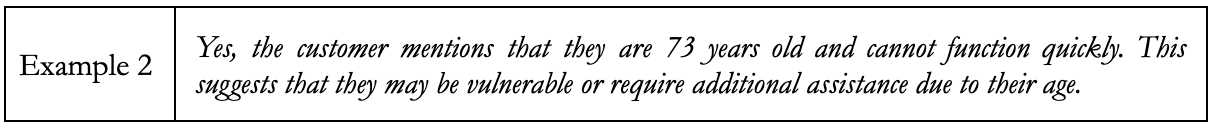

In Example 2, the model has been asked: “Does this customer mention anything that suggests they’re vulnerable?”

Whereas the first example gives a targeted summary of what the customer was trying to achieve, the second also explains its answer, which is characteristic of how LLMs work. Once a model has been trained on questions paired with explained answers, it handles new questions better than a model that is seeing such questions or situations for the first time.
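Both questions can be asked of the same conversation by wrapping the transcript and the question in a prompt. The sketch below only builds the prompt text; the template wording, the speaker labels, and the short transcript are assumptions made up for illustration. Sending the prompt to a model depends on whichever LLM service is used, so that step is left out.

```python
def build_prompt(transcript: list[tuple[str, str]], question: str) -> str:
    """Combine a conversation transcript and a question into one prompt string."""
    lines = "\n".join(f"{speaker}: {utterance}" for speaker, utterance in transcript)
    return (
        "Below is a conversation between a customer and a contact-centre agent.\n\n"
        f"{lines}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Invented transcript, loosely modelled on the home-phone example.
transcript = [
    ("Customer", "I'm really not happy with your service."),
    ("Agent", "I'm sorry to hear that. What's happened?"),
    ("Customer", "I've been trying to install my home phone for a week and it still doesn't work."),
]

for question in (
    "Why did the customer contact customer service?",
    "Does this customer mention anything that suggests they're vulnerable?",
):
    print(build_prompt(transcript, question))
    print("-" * 40)
```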

The potential use cases for a service like this in a contact centre are wide-ranging. A large language model could provide summaries of customer-agent conversations, detect vulnerable customers, and note promises made and products mentioned, as well as help with information retention and root cause analysis.

However, LLMs are not enough by themselves.

These models are simply designed to generate text, and as a result, they do not care about the truth. This means that while their output is often highly plausible, it can be factually inaccurate. If you’re working with a provider who is using Large Language Models to do conversation analytics, it’s crucial that they pay attention to proper verification and cross-checking procedures.
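One simple cross-check, sketched below under the assumption that the model has been asked to quote the parts of the conversation that support its answer, is to confirm that those quotes actually appear in the transcript. A quote that cannot be found suggests the model may have invented it. This catches only one kind of error; a genuine quote does not prove the conclusion drawn from it is correct.

```python
import re


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so trivial differences don't cause mismatches."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_quotes(transcript: str, quotes: list[str]) -> list[str]:
    """Return the quotes that do not appear in the transcript."""
    haystack = normalise(transcript)
    return [q for q in quotes if normalise(q) not in haystack]


transcript = (
    "Customer: I'm really unhappy.\n"
    "Customer: I still can't get my home phone installed."
)
# Quotes a model claimed as evidence (invented for illustration).
claimed = ["can't get my home phone installed", "I want to cancel my contract"]

print(unsupported_quotes(transcript, claimed))  # ['I want to cancel my contract']
```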

There are also specific risks attached to Large Language Models. Generative AI in particular is capable of perpetuating cultural bias, while hosted-LLM services can carry data protection risks. Safeguards such as anonymising customer conversations before the data enters the network, or running models on a secure cloud network, can help to mitigate these risks.
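As a rough sketch of the anonymisation step, the function below masks email addresses and phone-number-like digit runs before a transcript is sent anywhere. The two regular expressions are simplifying assumptions: they will miss many formats (and may also mask other long numbers, such as dates), and they do nothing about names, addresses, or account details, which real anonymisation would also need to handle.

```python
import re

# Deliberately simple patterns (assumptions, not production-grade).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")  # a digit, 7+ digits/spaces/hyphens, then a digit


def anonymise(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(anonymise("Call me on 07700 900123 or email jo.bloggs@example.com"))
# Call me on [PHONE] or email [EMAIL]
```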

Finally, Large Language Models often need to be augmented with additional services because they work on one text at a time. While a model might analyse a single conversation with ease, large-scale analytics across many conversations requires more curation, as well as enormous computing power. Again, it’s important to work with a provider that is adept at using LLMs in enterprise customer service.
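The gap between single-conversation and large-scale analysis can be sketched as a map-then-aggregate step: analyse each conversation on its own, then combine the results. In the sketch below, `keyword_classifier` is a hypothetical placeholder for a per-conversation LLM call (it only looks for keywords), and the three conversations are invented. At real scale, this loop is where the extra curation and computing power come in.

```python
from collections import Counter
from typing import Callable, Iterable


def keyword_classifier(conversation: str) -> str:
    """Hypothetical stand-in for asking an LLM 'Why did the customer contact us?'."""
    text = conversation.lower()
    if "install" in text:
        return "installation problem"
    if "bill" in text:
        return "billing query"
    return "other"


def contact_reason_counts(
    conversations: Iterable[str], classify: Callable[[str], str]
) -> Counter:
    """Classify each conversation independently, then count the reasons across all of them."""
    return Counter(classify(c) for c in conversations)


conversations = [
    "My home phone still isn't installed.",
    "Why is my bill so high this month?",
    "The engineer never came to install the line.",
]

counts = contact_reason_counts(conversations, keyword_classifier)
print(counts.most_common())  # [('installation problem', 2), ('billing query', 1)]
```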

Coming Up Next

Next in the series, we’ll be talking about what’s so hard about conversation analytics. Also on the horizon:

- How will intelligent chatbots and IVRs change your call centre?

- What really makes a good summary and is Generative AI up to the task?

- Just how risky is Generative AI?

